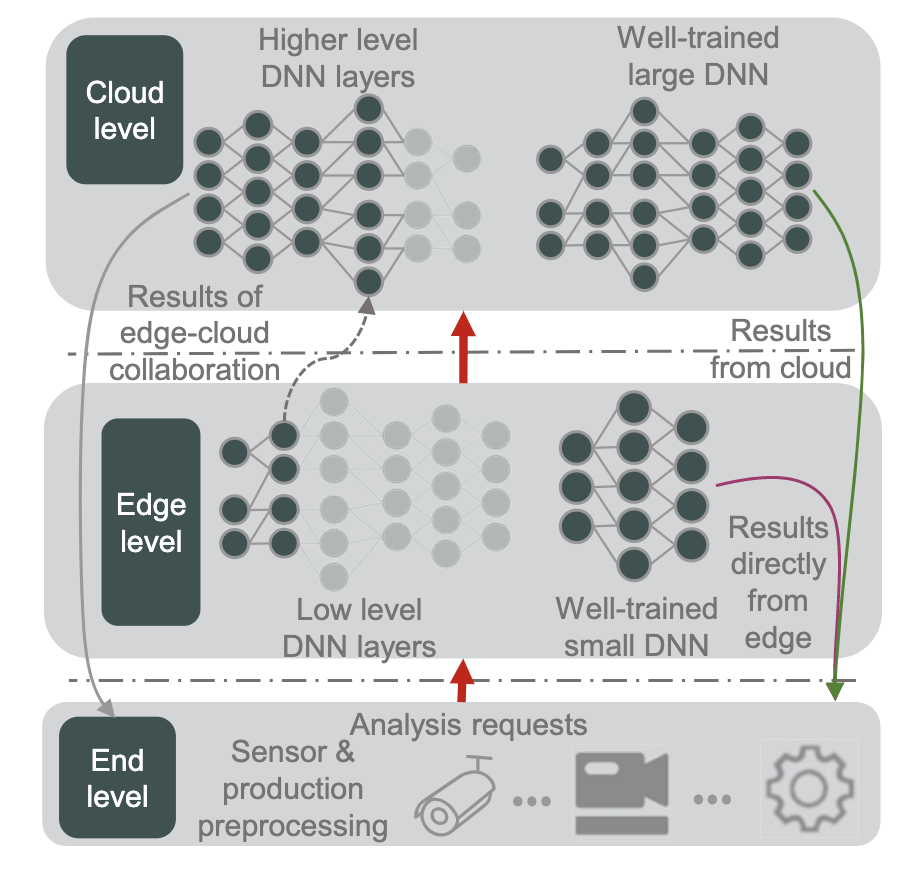

1. End Devices:

- Data Collection and Initial Processing: End devices, such as sensors, actuators, or mobile devices, collect raw data and perform initial preprocessing tasks like filtering, noise reduction, or basic feature extraction.

- Lightweight DNN Layers: These devices can host the early layers of a DNN, such as the first convolutional layers of an image-recognition model or simple feature extraction layers, provided those layers fit within the device's compute, memory, and energy budget.

- Edge Offloading: When computational demands exceed device capabilities, or when a latency deadline cannot be met locally, raw data or partial results (intermediate activations) can be offloaded to edge nodes for further processing.

2. Edge Nodes:

- Complementary Processing: Edge nodes, located closer to end devices than the cloud, provide more processing power and storage than end devices, though less than cloud data centers.

- Intermediate DNN Layers: Edge nodes can host more complex DNN layers, such as deeper convolutional layers, recurrent layers for sequential data, or initial decision-making layers.

- Local Inference and Decision-Making: They can perform inference tasks on locally collected data, reducing latency and network traffic.

- Cloud Offloading: For tasks requiring extensive computational resources or access to larger datasets, edge nodes can offload data and partial results to the cloud.

3. Cloud Data Centers:

- Centralized Hub: Cloud data centers offer vast computational resources, storage, and access to large-scale datasets.

- Complex DNN Layers: They host the most computationally demanding parts of a model, such as large fully connected layers or attention blocks, and can run entire large models, such as large language models, that do not fit on edge hardware.

- Model Training and Refinement: Cloud resources are used for training and refining DNN models using extensive datasets.

- Global Insights and Knowledge Sharing: Cloud-based models can aggregate insights from multiple edge devices and provide a global perspective for decision-making.
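The three-tier split described above can be sketched in plain Python. This is a minimal illustration under stated assumptions, not a production design: the helper names (`dense`, `relu`, `Tier`, `partition`, `distributed_inference`) and the toy weights are hypothetical, each "network hop" is just a function call, and in a real deployment the intermediate activations would be serialized and sent over the network between tiers.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Layer = Callable[[Vector], Vector]


def dense(weights: Sequence[Sequence[float]], bias: Sequence[float]) -> Layer:
    """Build a fully connected layer computing W @ x + b (pure Python, no NumPy)."""
    def layer(x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]
    return layer


def relu(x: Vector) -> Vector:
    """Element-wise ReLU activation."""
    return [max(0.0, v) for v in x]


@dataclass
class Tier:
    """One execution site (device, edge, or cloud) holding a contiguous slice of layers."""
    name: str
    layers: Sequence[Layer]

    def run(self, x: Vector) -> Vector:
        for layer in self.layers:
            x = layer(x)
        return x


def partition(layers: Sequence[Layer], device_end: int, edge_end: int) -> List[Tier]:
    """Layers [0, device_end) go to the device, [device_end, edge_end) to the edge, the rest to the cloud."""
    return [
        Tier("device", layers[:device_end]),
        Tier("edge", layers[device_end:edge_end]),
        Tier("cloud", layers[edge_end:]),
    ]


def distributed_inference(tiers: Sequence[Tier], x: Vector) -> Tuple[Vector, List[Tuple[str, int]]]:
    """Run each tier in order; the trace records how many values each tier hands onward."""
    trace = []
    for tier in tiers:
        x = tier.run(x)  # in a real system, x would be serialized and sent to the next tier here
        trace.append((tier.name, len(x)))
    return x, trace


# Toy 5-layer model with hypothetical weights.
layers = [
    dense([[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 1]], [0, 0, 0]),
    relu,
    dense([[1, -1, 0], [0, 1, 1]], [0.5, 0]),
    relu,
    dense([[2, 1]], [0]),
]

tiers = partition(layers, device_end=2, edge_end=4)
output, trace = distributed_inference(tiers, [1.0, -2.0, 3.0, 4.0])
print(output)  # [10.0]
print(trace)   # [('device', 3), ('edge', 2), ('cloud', 1)]
```

Because each tier runs a contiguous slice of the same layer list, the split changes where the computation happens but not its result; only the size of the handed-off vector (recorded in `trace`) affects how much data crosses the network.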

Benefits of Distributed DNN Architecture:

- Reduced Latency: Processing data closer to the source minimizes delays, essential for real-time applications.

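- Bandwidth Conservation: Because devices and edge nodes can send compact intermediate results or final decisions instead of raw data, less traffic reaches the cloud, lowering network load and costs.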

- Improved Privacy and Security: Sensitive data can be processed locally, reducing exposure to security risks.

- Enhanced Scalability: Adding edge nodes spreads growing workloads across the network, reducing reliance on centralized cloud infrastructure.

- Adaptability to Diverse Deployment Scenarios: The distribution can be tailored to specific network conditions, device capabilities, and application requirements.
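The latency and bandwidth benefits come down to simple arithmetic on payload sizes. The sketch below assumes hypothetical shapes (a 224 x 224 x 3 uint8 RGB frame as the raw input and a 14 x 14 x 64 int8 feature map as an intermediate result) and ignores round-trip time, protocol overhead, and compression; the function names are illustrative only.

```python
def payload_bytes(shape, bytes_per_element):
    """Size in bytes of a dense, uncompressed tensor with the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count * bytes_per_element


def transfer_ms(num_bytes, bandwidth_mbps):
    """Serialization time over a link in milliseconds (ignores RTT and protocol overhead)."""
    return num_bytes * 8 / (bandwidth_mbps * 1e3)


def required_mbps(num_bytes, frames_per_second):
    """Sustained bandwidth in Mbps needed to stream one payload per frame."""
    return num_bytes * 8 * frames_per_second / 1e6


raw = payload_bytes((224, 224, 3), 1)    # hypothetical uint8 RGB camera frame
feat = payload_bytes((14, 14, 64), 1)    # hypothetical int8 intermediate feature map
print(raw, feat)                         # 150528 12544
print(transfer_ms(raw, 10), transfer_ms(feat, 10))      # per-frame time on a 10 Mbps link
print(required_mbps(raw, 30), required_mbps(feat, 30))  # bandwidth for a 30 fps stream
```

In this example the intermediate payload is 12 times smaller than the raw frame, but that ratio depends entirely on where the model is cut: early convolutional layers often produce activations larger than their input, so the savings only materialize if the split point is chosen with layer output sizes in mind.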

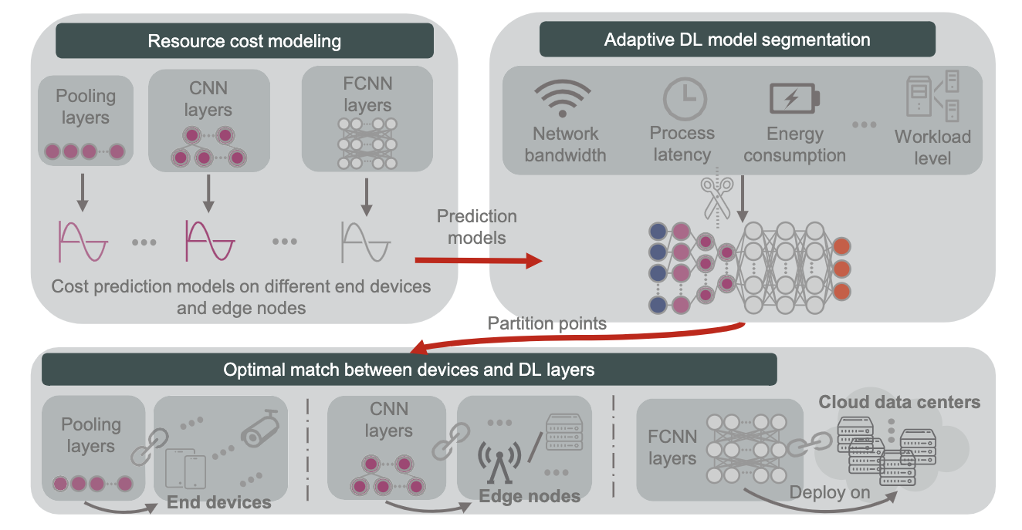

Key Considerations for Effective Distribution:

- Model Segmentation: Strategically dividing DNN layers across devices, edge nodes, and the cloud based on each layer's computational cost and the size of the intermediate data that must be transmitted at each cut.

- Model Compression and Pruning: Reducing model size and complexity for deployment on resource-constrained devices.

- Communication Optimization: Minimizing the data and model updates exchanged between tiers, for example by compressing intermediate activations or by using federated learning, in which devices share model updates rather than raw data.

- Resource Management: Balancing workload distribution and computational resources across devices to optimize performance and energy efficiency.
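Model segmentation and communication optimization interact: the best split point depends on both per-layer compute time and the size of the tensor that crosses the network. Below is a minimal sketch of a profiling-based search over a single device/server cut, assuming you already have measured per-layer latencies on the device and on the server (edge or cloud) plus per-layer output sizes. The function name `best_split` and all profile numbers are hypothetical, and the cost model treats the cut tensor (or, for a fully local run, the final output) as the only payload while ignoring queuing and energy.

```python
from typing import Sequence, Tuple


def best_split(
    device_ms: Sequence[float],
    server_ms: Sequence[float],
    out_bytes: Sequence[int],
    input_bytes: int,
    bandwidth_mbps: float,
    rtt_ms: float = 0.0,
) -> Tuple[int, float]:
    """Return (k, latency_ms): run layers [0, k) on the device and [k, n) on the server.

    k == 0 offloads the raw input; k == n runs everything locally and sends only the output.
    """
    n = len(device_ms)
    if len(server_ms) != n or len(out_bytes) != n:
        raise ValueError("profiles must have one entry per layer")

    best_k, best_latency = 0, float("inf")
    for k in range(n + 1):
        # Tensor that crosses the network at this cut point.
        payload = input_bytes if k == 0 else out_bytes[k - 1]
        network_ms = payload * 8 / (bandwidth_mbps * 1e3) + rtt_ms
        latency = sum(device_ms[:k]) + network_ms + sum(server_ms[k:])
        if latency < best_latency:
            best_k, best_latency = k, latency
    return best_k, best_latency


# Hypothetical 4-layer profile: layer 1 expands the data, later layers shrink it.
device_ms = [5, 20, 40, 10]
server_ms = [1, 2, 4, 1]
out_bytes = [200_000, 50_000, 50_000, 1_000]

print(best_split(device_ms, server_ms, out_bytes, 150_528, bandwidth_mbps=10, rtt_ms=20))
# (2, 90.0): cut after layer 2 on a 10 Mbps link
```

The answer shifts with the network: under the same hypothetical profile, a 1000 Mbps link makes full offloading (k = 0) cheapest, while a 0.1 Mbps link makes fully local execution (k = 4) cheapest. A device/edge/cloud deployment would extend the same search to two cut points, one for the device-to-edge hop and one for the edge-to-cloud hop.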